Release Notes - Compare answers

Supporting intelligent annotation

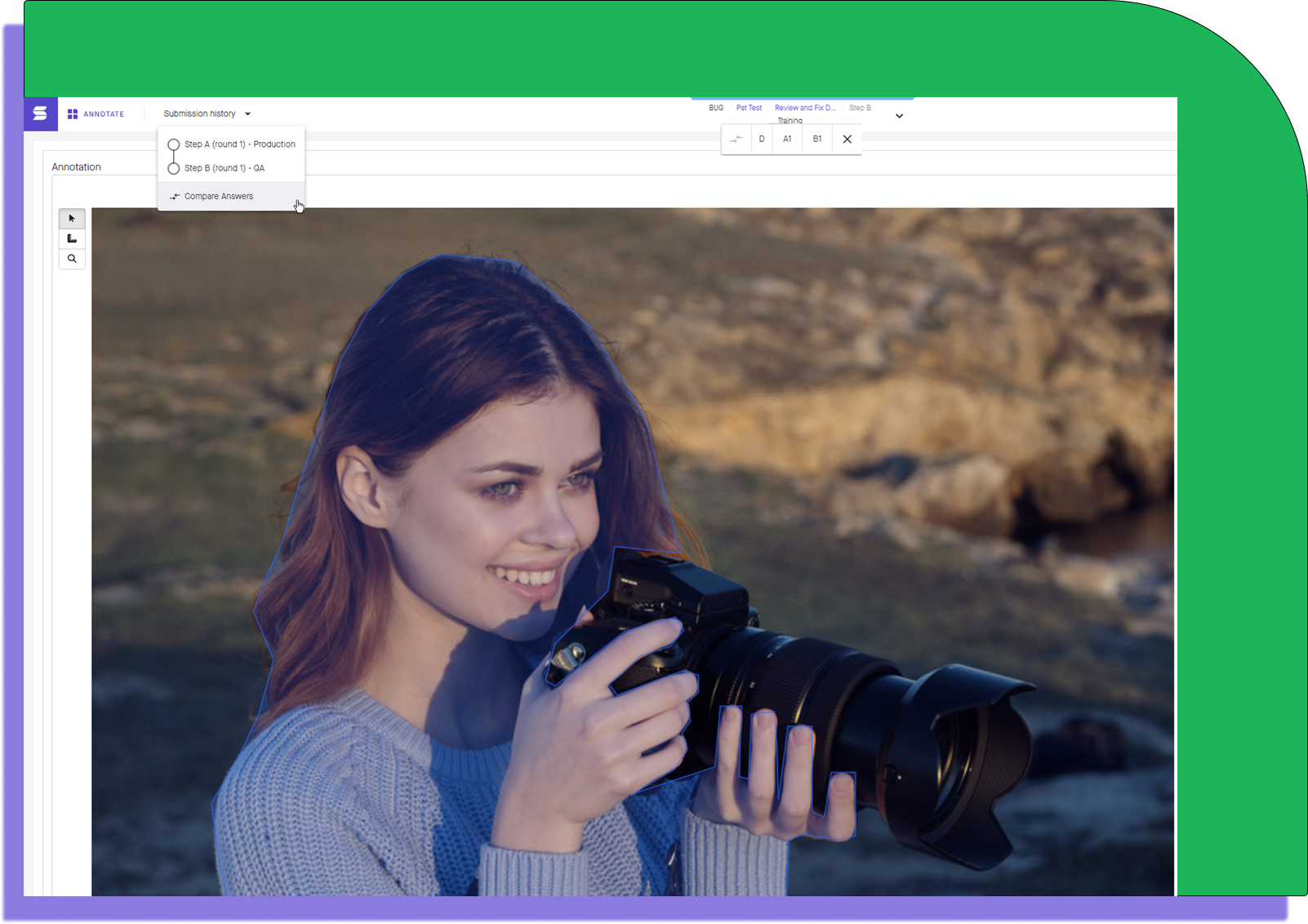

To support increasingly complex workflows, you can now directly compare answers submitted at different steps of a task.

Comparing different sets of submitted annotation data side by side makes validation faster and more thorough.

Previously, the read-only workspace only let you examine the annotations submitted for a single step. That is useful for reviewing submitted work, but it limits your view of a task's lifecycle. For example, you may want to compare a reviewer's answers to an annotator's, or compare an answer submitted by a human to a model prediction.

How comparing answers can help

Comparing answers extends the annotation review workflow. This release adds new options in the workspace to support validation.

Toggle between sets of answers in the workspace

In the workspace of a project with answer comparison enabled, you can switch between sets of answers submitted either as associated annotations or as pre-annotations.

This behaves like the current review workflow, except that the page doesn't reload when you switch. If you pan to a specific location on the canvas, the camera stays there while you switch between sets, which makes it much easier to compare shapes and their positions.

Pick between submitted or default answers

Besides switching between steps, you can also switch between the original default annotations and each submitted answer. Toggling between answers shows how each annotation changed over the course of the task.