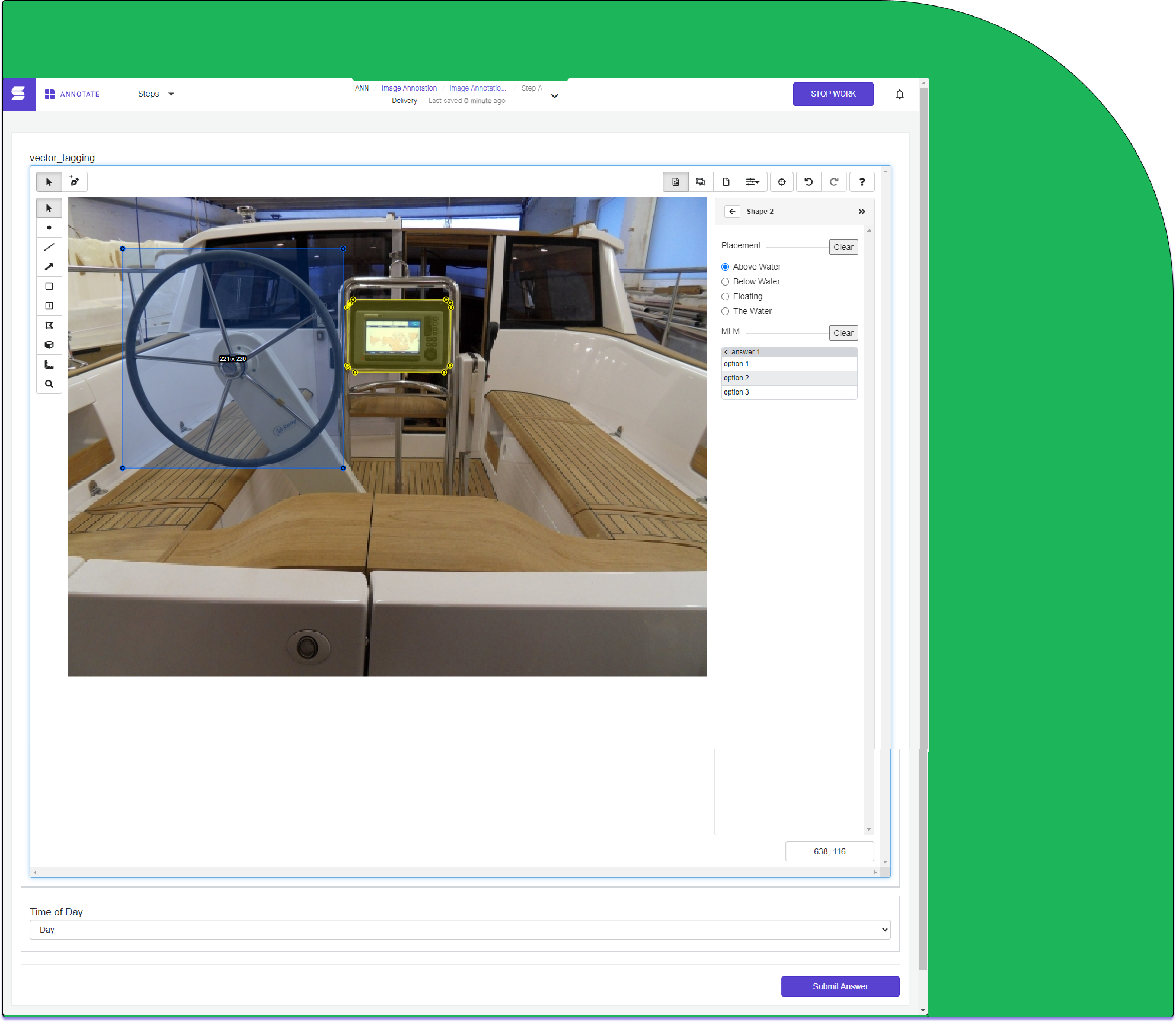

2D Vector annotation

Image annotation

Vector annotation is a type of workspace output that overlays shapes onto an image or video to identify or track an object. The shapes are placed in two dimensions (x and y coordinates) and come in several types, from points and lines to rectangles and multipoint polygons. Additional controls in each image let you track and isolate specific shapes or, for video, move across multiple frames. Vector annotation has a wide range of applications, for example:

- Movement tracking with keypoint annotations to capture a person's limbs and torso.

- Single-item object tracking with a bounding box, for example to detect road signs on a residential street.

- Instance segmentation with polygons to capture each item in a scene.

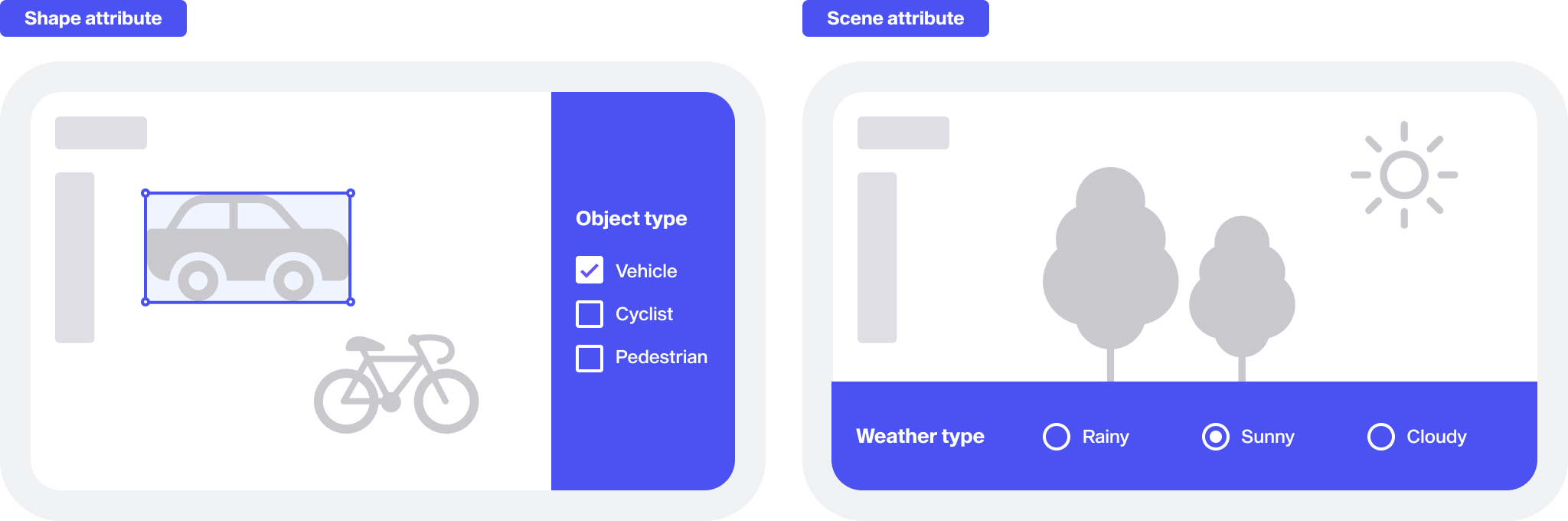

Shape and Scene attributes

Two types of attributes can be added to collect additional information about the asset being annotated:

- Shape attributes: Describe the characteristics of a specific shape in the workspace. These attributes are shown in a side panel that appears when you click on a particular shape in the annotation workspace.

- Scene attributes: Describe the characteristics of the entire scene in the workspace. These attributes are shown underneath the annotation workspace.

Supported formats

- .jpeg

- .jpg

- .png
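You can screen files locally before uploading them. The sketch below is a hypothetical pre-check that accepts only the extensions listed above. The helper name `is_supported_image` is illustrative and is not part of the platform. The sketch also assumes extensions are matched case-insensitively, which this page does not state.

```python
from pathlib import Path

# Extensions from the "Supported formats" list for image annotation.
SUPPORTED_IMAGE_EXTENSIONS = {".jpeg", ".jpg", ".png"}


def is_supported_image(filename: str) -> bool:
    """Return True if the file has one of the supported image extensions.

    Assumption: the comparison is case-insensitive, so "IMG_01.JPG" passes.
    """
    return Path(filename).suffix.lower() in SUPPORTED_IMAGE_EXTENSIONS


files = ["street.jpg", "scan.PNG", "frame.bmp"]
accepted = [f for f in files if is_supported_image(f)]
```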

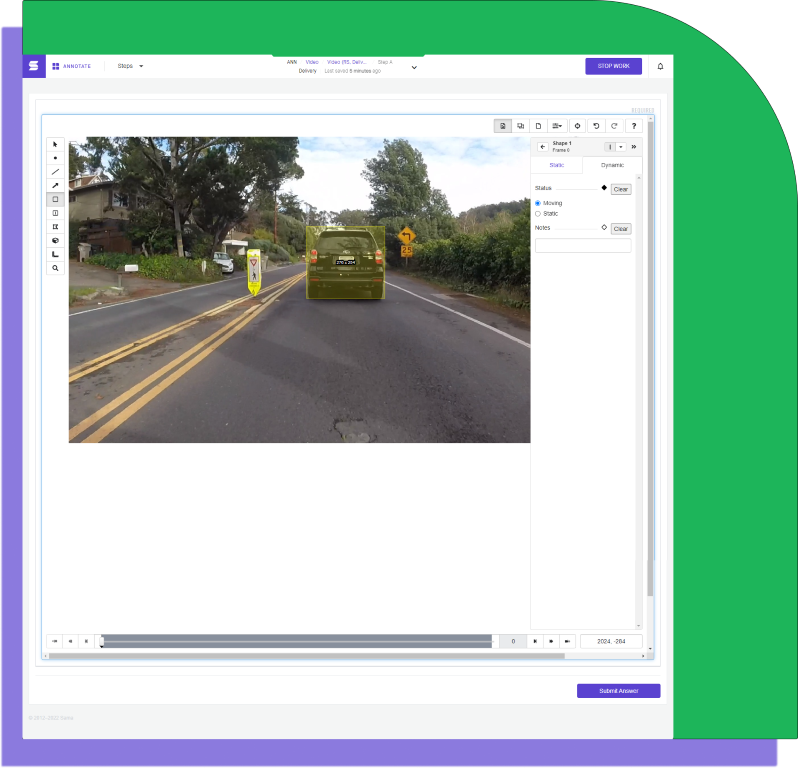

Video annotation

Video annotation for vector assets borrows the overall format and tools from vector image annotation. In an image workspace each asset is standalone, whereas video annotation lets you use a sequence of image frames or a video file as the asset source.

Shape and Scene attributes can be applied to video workspaces in the same way as image workspaces, with the addition of dynamic answers. Dynamic answers allow variable flags to be set, such as movement or frame notes.

Supported formats

- .avi

- .mkv

- .mov

- .mp4

Alternatively, the asset source can be a set of image frames, stored in subfolders or in a .zip file and named in sequence: 0.jpg, 1.jpg, 2.jpg, ..., 99.jpg, and so on.
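Frames are identified by number, so their order matters when you prepare a sequence. The sketch below is a minimal local check. It assumes frames are named `<n>.jpg` and start at 0, as in the sequence shown above. The helper names are hypothetical. Reporting gaps in the numbering is a precaution, not a documented rule.

```python
from __future__ import annotations

import re
from pathlib import Path

# Video containers from the "Supported formats" list for video annotation.
VIDEO_EXTENSIONS = {".avi", ".mkv", ".mov", ".mp4"}
# Frame files named by their position in the sequence: 0.jpg, 1.jpg, ...
FRAME_NAME = re.compile(r"^(\d+)\.jpg$", re.IGNORECASE)


def is_supported_video(filename: str) -> bool:
    return Path(filename).suffix.lower() in VIDEO_EXTENSIONS


def frame_index(path: str) -> int:
    """Extract the numeric index from a frame path such as "clip/12.jpg"."""
    match = FRAME_NAME.match(Path(path).name)
    if match is None:
        raise ValueError(f"not a numbered .jpg frame: {path}")
    return int(match.group(1))


def order_frames(paths: list[str]) -> list[str]:
    """Sort frames numerically; a plain string sort puts "10.jpg" before "2.jpg"."""
    return sorted(paths, key=frame_index)


def missing_frames(paths: list[str]) -> list[int]:
    """List indices absent from 0..max, assuming the sequence starts at 0."""
    present = {frame_index(p) for p in paths}
    if not present:
        return []
    return [i for i in range(max(present) + 1) if i not in present]
```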

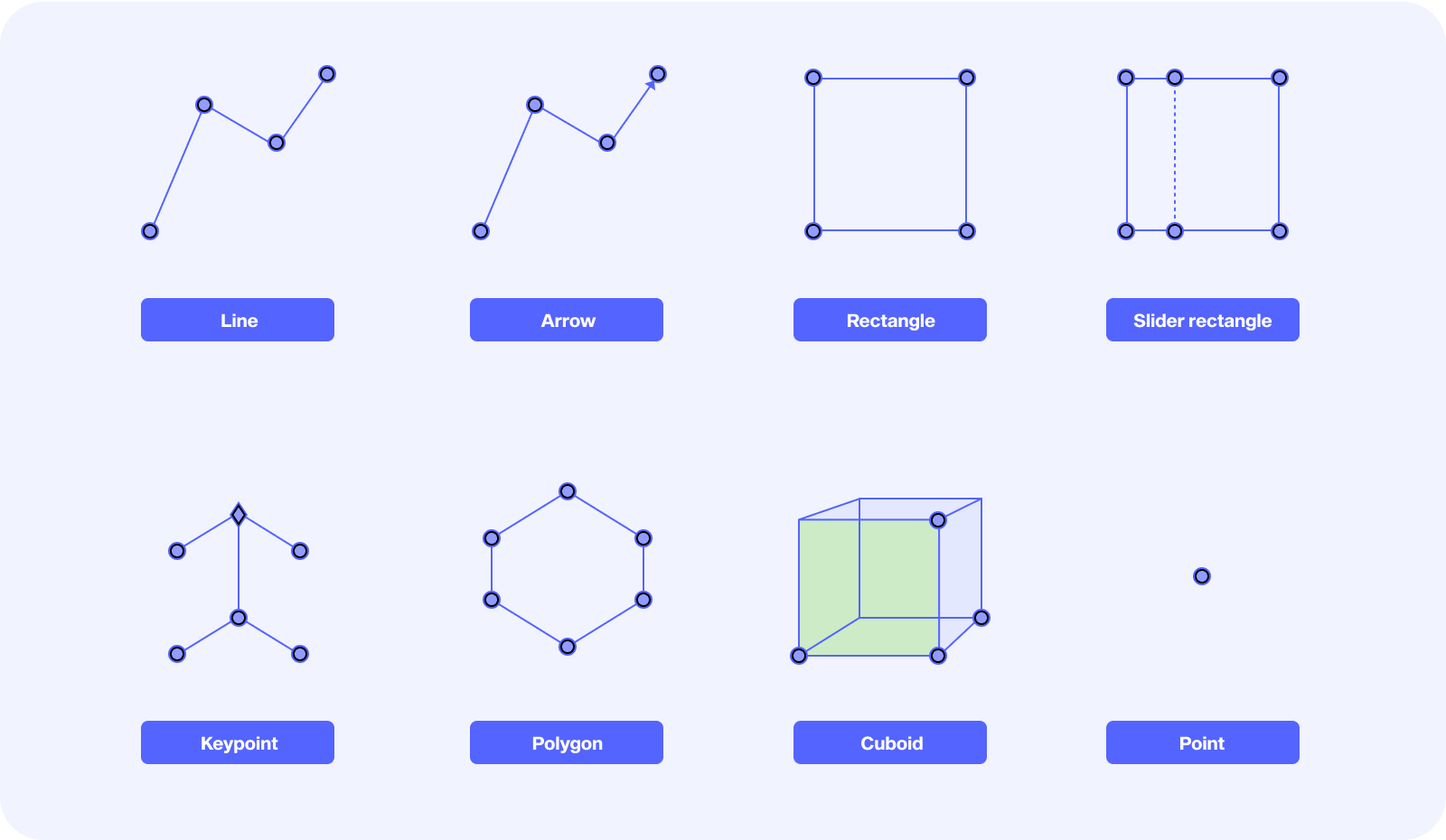

Vector tools

The examples below show what each vector tool looks like in the workspace and how its results are structured in the JSON export file.

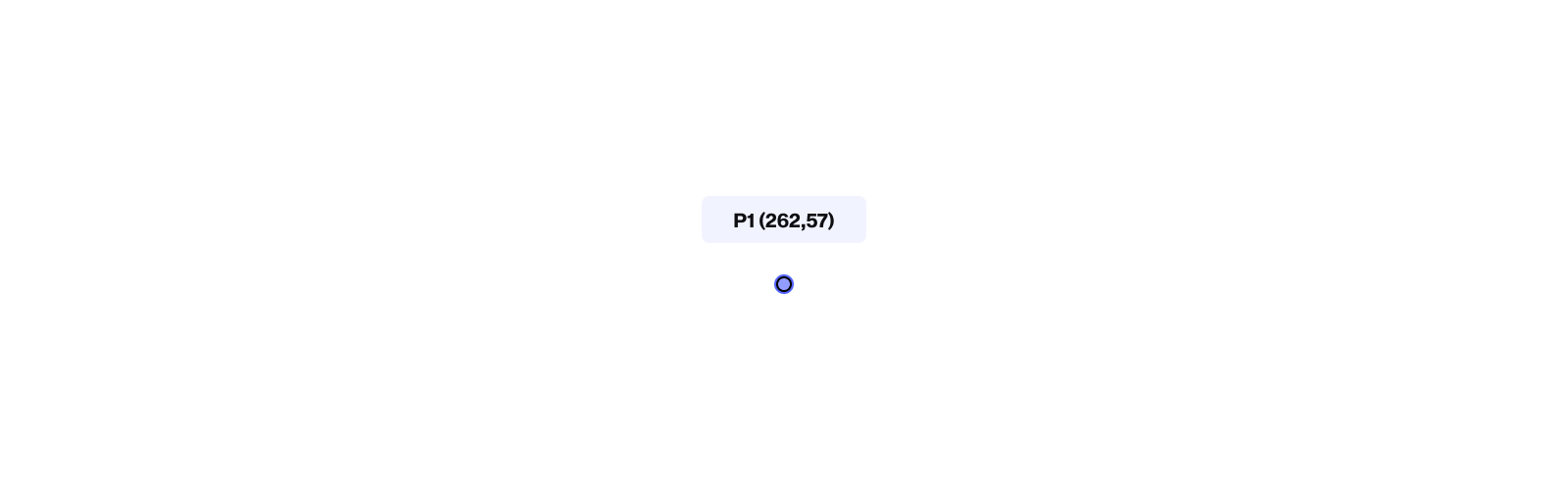

Point

```json
"shapes": [
  {
    "tags": { ... },
    "type": "point",
    "index": 1,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [262, 57]
        ],
        "visibility": 1,
        "frame_number": 0
      }
    ],
    ...
  }
```
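Every shape in the export shares the same layout: a `type`, an `index`, and a list of `key_locations`. Each entry in `key_locations` holds the `points` for one `frame_number`. The sketch below uses plain Python with no SDK and reads the point example into per-frame coordinates. It leaves out the `tags` objects and the elided fields, and `points_by_frame` is an illustrative name. The image example has a single entry, with `frame_number` 0.

```python
from __future__ import annotations

# Trimmed copy of the point example above ("tags" and elided fields omitted).
point_shape = {
    "type": "point",
    "index": 1,
    "key_locations": [
        {"points": [[262, 57]], "visibility": 1, "frame_number": 0},
    ],
}


def points_by_frame(shape: dict) -> dict[int, list[tuple[float, float]]]:
    """Map each frame_number to the (x, y) points recorded for one shape."""
    result: dict[int, list[tuple[float, float]]] = {}
    for location in shape["key_locations"]:
        coords = [(float(x), float(y)) for x, y in location["points"]]
        result[location["frame_number"]] = coords
    return result
```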

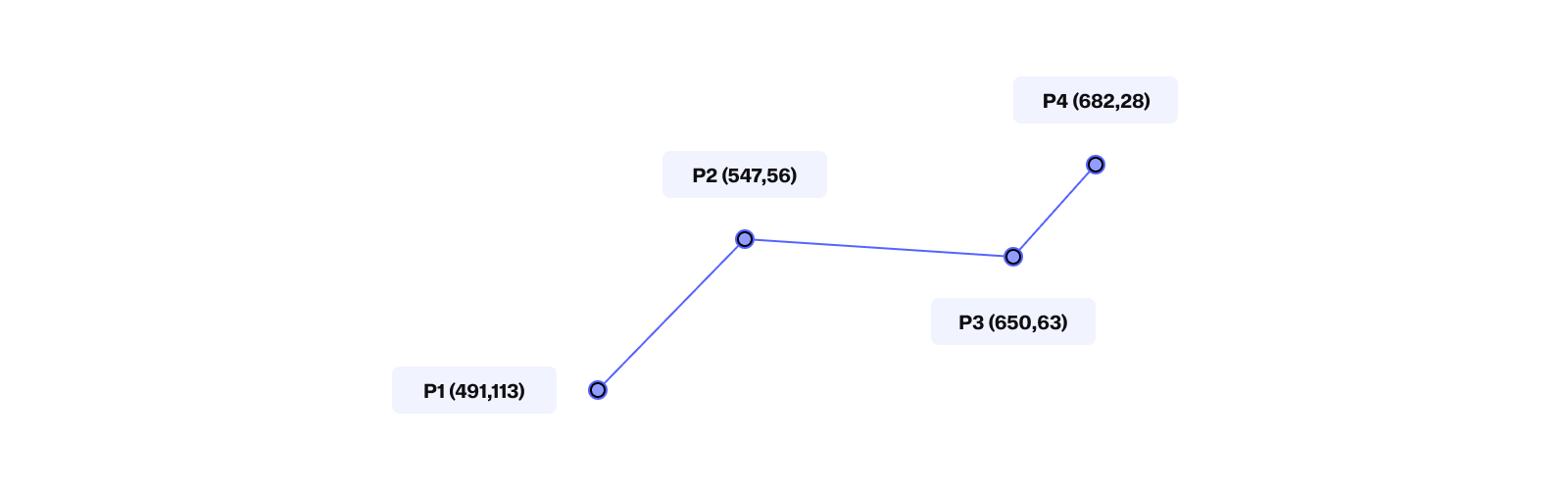

Line

```json
"shapes": [
  {
    "tags": { ... },
    "type": "line",
    "index": 2,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [491, 113],
          [547, 56],
          [650, 63],
          [682, 28]
        ],
        "visibility": 1,
        "frame_number": 0
      }
    ],
    ...
  }
```
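A line is exported as an ordered list of vertices. As an example of consuming this structure, the sketch below computes the length of the polyline by summing the distances between consecutive vertices. The result is in the same units as the exported coordinates, which appear to be image pixels. The helper name is hypothetical.

```python
import math

# Vertices from the line example above, in export order.
line_points = [[491, 113], [547, 56], [650, 63], [682, 28]]


def polyline_length(points: list) -> float:
    """Sum of Euclidean distances between consecutive vertices."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


length = polyline_length(line_points)
```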

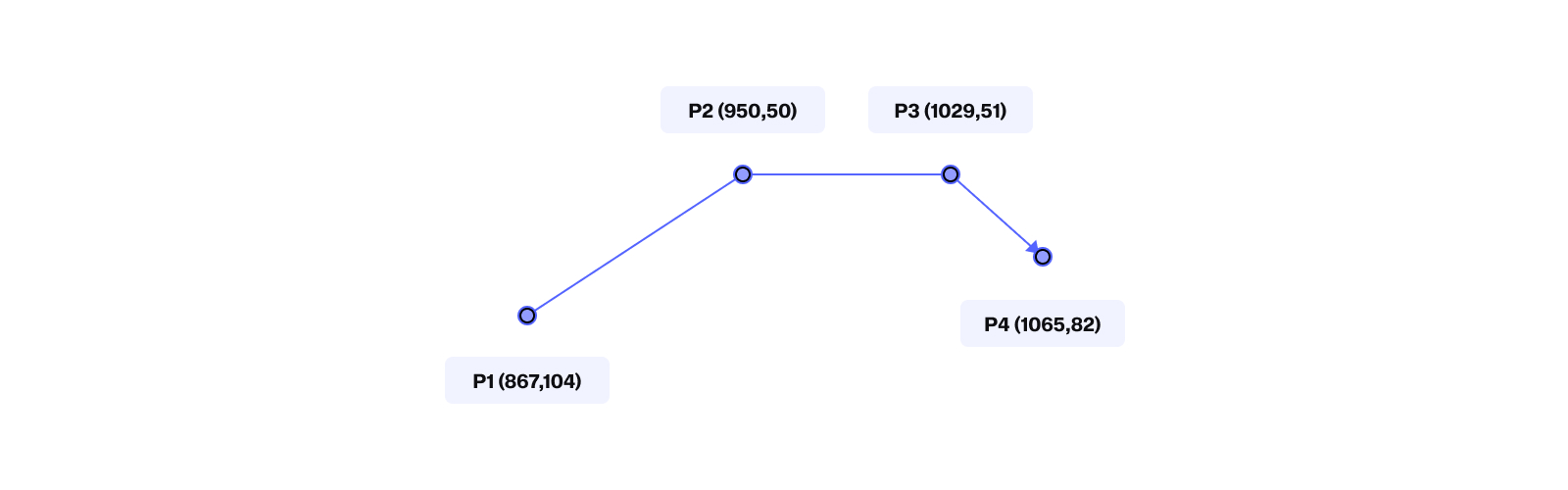

Arrow

```json
"shapes": [
  {
    "tags": { ... },
    "type": "arrow",
    "index": 3,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [867, 104],
          [950, 50],
          [1029, 51],
          [1065, 82]
        ],
        "visibility": 1,
        "frame_number": 0
      }
    ],
    ...
  }
```
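The arrow export has the same structure as the line export; only `type` differs. This section does not say which end carries the arrowhead. The sketch below therefore assumes the head is the last point in `points`, which you should verify against the workspace, and computes the direction from tail to head. Both helper names are hypothetical.

```python
import math

# Vertices from the arrow example above, in export order.
arrow_points = [[867, 104], [950, 50], [1029, 51], [1065, 82]]


def arrow_endpoints(points: list) -> tuple:
    """Return (tail, head), ASSUMING the head is the last exported point."""
    return tuple(points[0]), tuple(points[-1])


def arrow_heading_degrees(points: list) -> float:
    """Angle of the tail-to-head vector, counter-clockwise from the +x axis.

    Image y grows downward, so dy is negated to get a conventional angle.
    """
    (x0, y0), (x1, y1) = arrow_endpoints(points)
    return math.degrees(math.atan2(-(y1 - y0), x1 - x0))
```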

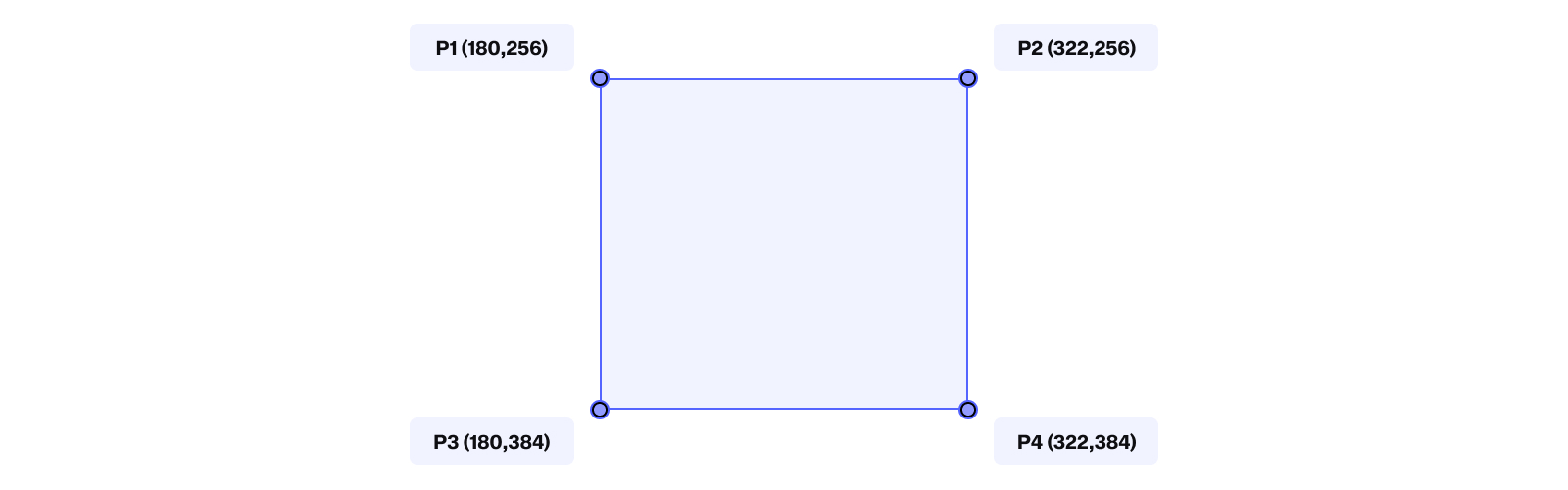

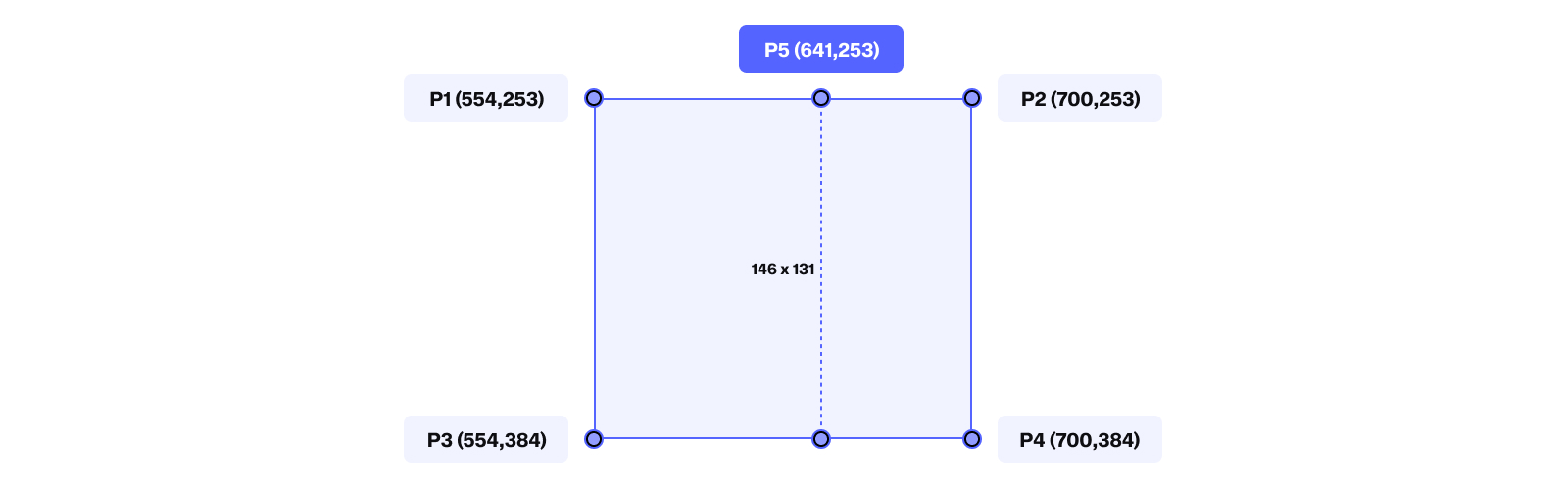

Rectangle

```json
"shapes": [
  {
    "tags": { ... },
    "type": "rectangle",
    "index": 4,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [180, 256],
          [322, 256],
          [180, 384],
          [322, 384]
        ],
        "visibility": 1,
        "frame_number": 0
      }
    ],
    ...
  }
```
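A rectangle is exported as its four corners rather than as an origin plus a size. Many training pipelines expect `(x_min, y_min, width, height)` instead, so a conversion like the hypothetical `to_xywh` helper below may be useful. It takes the minimum and maximum coordinates rather than relying on the corner order seen in the example, which is top-left, top-right, bottom-left, bottom-right.

```python
# Corners from the rectangle example above.
rectangle_points = [[180, 256], [322, 256], [180, 384], [322, 384]]


def to_xywh(points: list) -> tuple:
    """Convert corner points to (x_min, y_min, width, height)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)


box = to_xywh(rectangle_points)
```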

Slider rectangle

```json
"shapes": [
  {
    "tags": { ... },
    "type": "slider_rectangle",
    "index": 5,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [554, 253],
          [700, 253],
          [554, 384],
          [700, 384],
          [641, 253]
        ],
        "visibility": 1,
        "frame_number": 0
      }
    ],
    ...
  }
```
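A slider rectangle is exported with five points rather than four. This section does not document the fifth point. In the example, the first four points match the corner pattern of a plain rectangle, and the fifth lies on the top edge (y = 253). The sketch below relies on that observation as an assumption. It splits the shape into a bounding box and the extra point, and it reports the extra point's horizontal position as a fraction of the box width. The helper name is hypothetical.

```python
# Points from the slider rectangle example above.
slider_points = [[554, 253], [700, 253], [554, 384], [700, 384], [641, 253]]


def split_slider_rectangle(points: list) -> tuple:
    """Return (box, extra_point, fraction_along_width).

    ASSUMPTION: points[0:4] are the box corners and points[4] is the extra
    slider point; verify this against your own exports.
    """
    corners, extra = points[:4], points[4]
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    box = (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))
    # Horizontal offset of the extra point from the left edge, as a fraction.
    fraction = (extra[0] - box[0]) / box[2] if box[2] else 0.0
    return box, tuple(extra), fraction


box, extra, fraction = split_slider_rectangle(slider_points)
```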

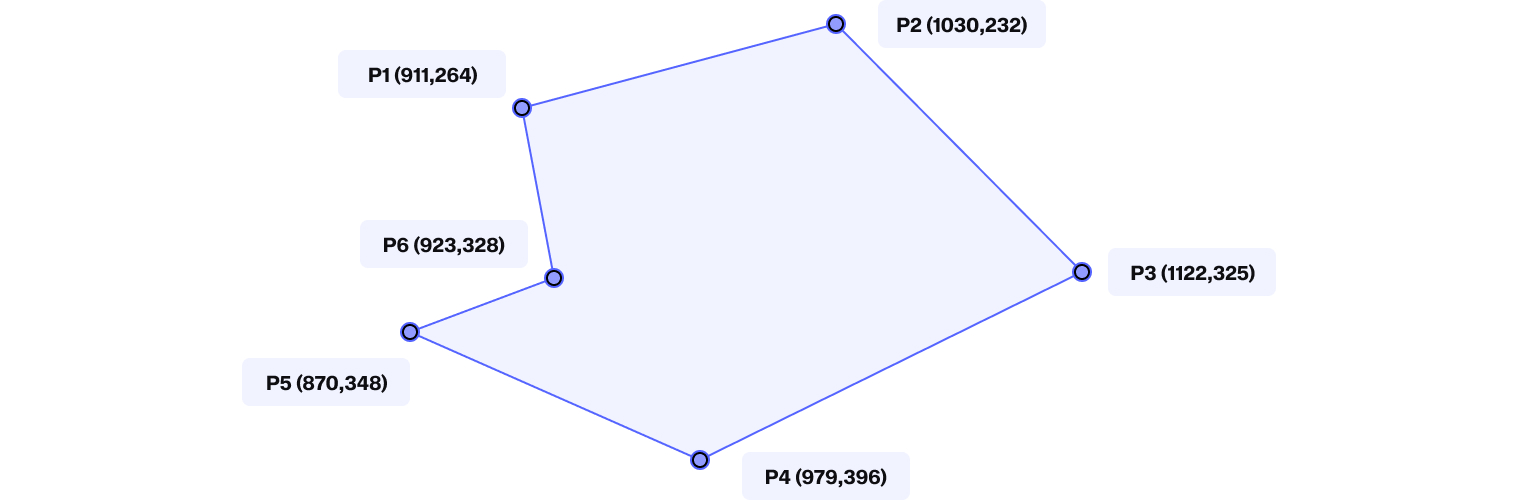

Polygon

```json
"shapes": [
  {
    "tags": { ... },
    "type": "polygon",
    "index": 6,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [911, 264],
          [1030, 232],
          [1122, 325],
          [979, 396],
          [870, 348],
          [923, 328]
        ],
        "visibility": 1,
        "frame_number": 0
      }
    ],
    ...
  }
```
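A polygon is exported as its vertex list. In the example, the first vertex is not repeated at the end. The area it encloses can be computed with the shoelace formula, as sketched below. The sketch assumes a simple polygon whose edges do not cross, and `polygon_area` is an illustrative name.

```python
# Vertices from the polygon example above, in export order.
polygon_points = [[911, 264], [1030, 232], [1122, 325], [979, 396], [870, 348], [923, 328]]


def polygon_area(points: list) -> float:
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula.

    The last vertex is joined back to the first to close the outline.
    """
    total = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


area = polygon_area(polygon_points)
```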

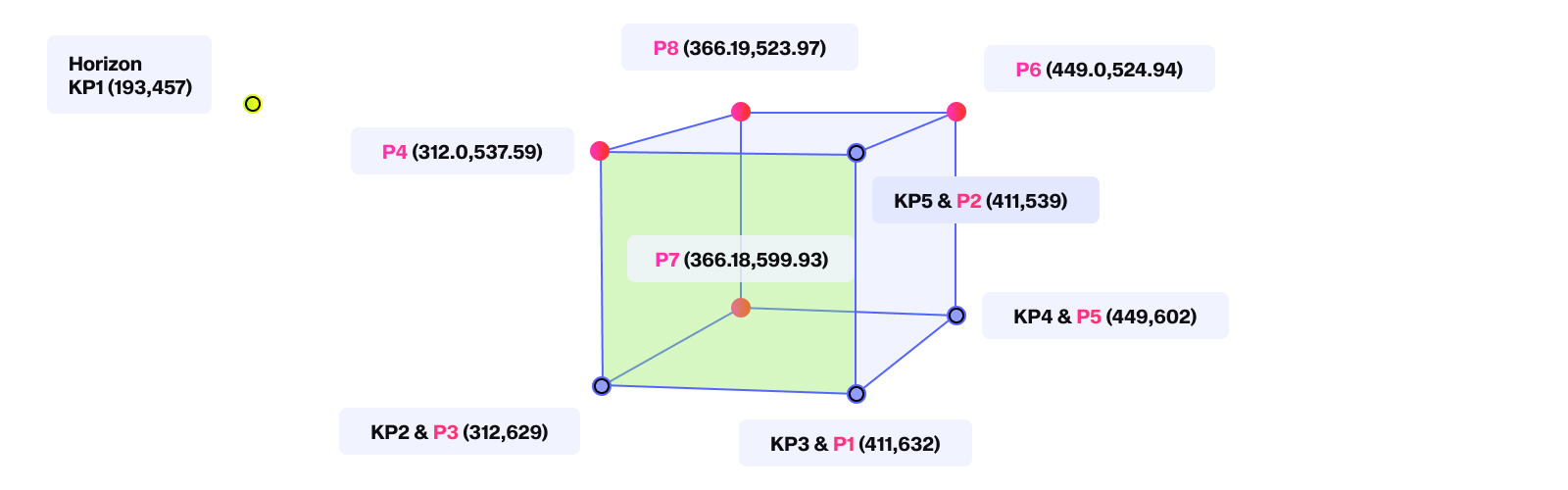

Cuboid (2D)

In the JSON export file, a 2D cuboid is described by two sets of points, `points` and `key_points`, labeled P and KP, respectively, in the figure above.

For the keypoints:

- KP1 corresponds to the horizon

- KP2 and KP3 define which of the six sides of the cube is facing forward (shown by a yellow face in the workspace)

- KP4 denotes the depth of the cube

- KP5 denotes the height of the cube

For the points, which are computed from the keypoints above:

- P1, P2, P3, and P4 correspond to the corners of the front face of the cube

- P5, P6, P7, and P8 correspond to the corners of the back face of the cube

```json
"shapes": [
  {
    "tags": { ... },
    "type": "cuboid",
    "index": 7,
    "key_locations": [
      {
        "tags": { ... },
        "points": [
          [411, 632],
          [411, 539],
          [312, 629],
          [312.0, 537.5942857142857],
          [449, 602],
          [449.0, 524.9428571428572],
          [366.1883084984444, 599.9343376453251],
          [366.1883084984444, 523.9749467823809]
        ],
        "visibility": 1,
        "frame_number": 0,
        "key_points": [
          [193, 457],
          [312, 629],
          [411, 632],
          [449, 602],
          [411, 539]
        ]
      }
    ],
    ...
  }
```
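The P/KP description above gives a direct mapping. The eight exported `points` split into a front face (P1 to P4) and a back face (P5 to P8), and the five `key_points` correspond to KP1 to KP5 in order. The sketch below applies that mapping to the example and uses hypothetical helper names. It does not recompute the points from the keypoints, because this page does not describe the projection used for that step.

```python
from __future__ import annotations

# The cuboid's key_locations entry from the example above ("tags" omitted).
cuboid_location = {
    "points": [
        [411, 632], [411, 539], [312, 629], [312.0, 537.5942857142857],
        [449, 602], [449.0, 524.9428571428572],
        [366.1883084984444, 599.9343376453251],
        [366.1883084984444, 523.9749467823809],
    ],
    "visibility": 1,
    "frame_number": 0,
    "key_points": [[193, 457], [312, 629], [411, 632], [449, 602], [411, 539]],
}


def cuboid_faces(location: dict) -> tuple[list, list]:
    """Split the eight points into the front face (P1-P4) and back face (P5-P8)."""
    points = [tuple(p) for p in location["points"]]
    if len(points) != 8:
        raise ValueError(f"expected 8 cuboid points, got {len(points)}")
    return points[:4], points[4:]


def named_key_points(location: dict) -> dict:
    """Label the key_points KP1..KP5 in export order."""
    return {f"KP{i}": tuple(p) for i, p in enumerate(location["key_points"], start=1)}


front, back = cuboid_faces(cuboid_location)
kp = named_key_points(cuboid_location)
```

In the example export, P1, P2, P3, and P5 are identical to KP3, KP5, KP2, and KP4, respectively. Comparing them gives a quick consistency check on the mapping.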

Keypoint

In the JSON export file, the keypoint options (classes and connections) are summarized in the nested JSON object _extras, as shown below.

```json
{
  "tasks": [
    {
      "id": "6124f0ceda72d104a1e0b23b",
      "project_id": 8613,
      "priority": 0,
      "created_at": "2021-08-24T13:17:34.241Z",
      "state": "completed",
      "data": { ... },
      "answers": { ... },
      "batch_id": 70718,
      "task_url": "https://api.sama.com/projects/8613/tasks/6124f0ceda72d104a1e0b23b",
      "_extras": {
        "keypoint_options": {
          "Video": {
            "classes": {
              "Keypoint Class": {
                "points": [
                  "Keypoint 1",
                  "Keypoint 2",
                  "Keypoint 3",
                  "Keypoint 4",
                  "Keypoint 5",
                  "Keypoint 6"
                ],
                "connections": [
                  { "from": "Keypoint 1", "to": "Keypoint 2" },
                  { "from": "Keypoint 1", "to": "Keypoint 4" },
                  { "from": "Keypoint 1", "to": "Keypoint 3" },
                  { "from": "Keypoint 4", "to": "Keypoint 5" },
                  { "from": "Keypoint 4", "to": "Keypoint 6" }
                ]
              }
            }
          }
        }
      }
    }
  ]
}
```
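`_extras.keypoint_options` describes the skeleton: an ordered list of named points for each class, plus the connections between them. A common next step is to turn the named connections into index pairs for drawing or training, and the sketch below does that. It assumes the key under `keypoint_options` (here `"Video"`) identifies the workspace output, which this page does not spell out. The `output_name` parameter and the `skeleton_edges` helper are therefore hypothetical.

```python
from __future__ import annotations

# Trimmed copy of the task above: only the fields used here are kept.
task = {
    "id": "6124f0ceda72d104a1e0b23b",
    "_extras": {
        "keypoint_options": {
            "Video": {
                "classes": {
                    "Keypoint Class": {
                        "points": [f"Keypoint {i}" for i in range(1, 7)],
                        "connections": [
                            {"from": "Keypoint 1", "to": "Keypoint 2"},
                            {"from": "Keypoint 1", "to": "Keypoint 4"},
                            {"from": "Keypoint 1", "to": "Keypoint 3"},
                            {"from": "Keypoint 4", "to": "Keypoint 5"},
                            {"from": "Keypoint 4", "to": "Keypoint 6"},
                        ],
                    }
                }
            }
        }
    },
}


def skeleton_edges(task: dict, output_name: str, class_name: str) -> list[tuple[int, int]]:
    """Return a class's connections as (from, to) pairs of 0-based point indices.

    Indices follow the order of the class's "points" list.
    """
    options = task["_extras"]["keypoint_options"][output_name]["classes"][class_name]
    position = {name: i for i, name in enumerate(options["points"])}
    return [(position[c["from"]], position[c["to"]]) for c in options["connections"]]


edges = skeleton_edges(task, "Video", "Keypoint Class")
```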

Updated over 2 years ago